Coursera: Machine Learning (Week 4) Quiz - Neural Networks: Representation | Andrew Ng

1. Neural Networks - Representation:

Don't just copy & paste for the sake of completion. The solutions uploaded here are only for reference. They are meant to unblock you if you get stuck somewhere. Make sure you understand the solution first.

1. Which of the following statements are true? Check all that apply.

- Any logical function over binary-valued (0 or 1) inputs x1 and x2 can be (approximately) represented using some neural network.

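- Suppose you have a multi-class classification problem with three classes, trained with a 3 layer network. Let $a_1^{(3)} = (h_\Theta(x))_1$ be the activation of the first output unit, and similarly $a_2^{(3)} = (h_\Theta(x))_2$ and $a_3^{(3)} = (h_\Theta(x))_3$. Then for any input x, it must be the case that $a_1^{(3)} + a_2^{(3)} + a_3^{(3)} = 1$.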

- A two layer (one input layer, one output layer; no hidden layer) neural network can represent the XOR function.

- The activation values of the hidden units in a neural network, with the sigmoid activation function applied at every layer, are always in the range (0, 1).
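Some of the statements above can be checked numerically. The following is a minimal sketch in Python (standing in for Octave); the weights are hand-picked assumptions, not values from the quiz. It builds XOR from one hidden layer of sigmoid units, which a network without a hidden layer cannot do because XOR is not linearly separable. It also shows that independent sigmoid output units need not sum to 1.

```python
import math

def sigmoid(z):
    """Logistic function 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + math.exp(-z))

def unit(weights, inputs):
    """One sigmoid unit; weights[0] is the bias weight."""
    z = weights[0] + sum(w * a for w, a in zip(weights[1:], inputs))
    return sigmoid(z)

# Hand-picked (assumed) weights: two hidden units feeding one OR-like output.
HIDDEN = [
    [-10, 20, -20],   # approximately x1 AND (NOT x2)
    [-10, -20, 20],   # approximately (NOT x1) AND x2
]
OUTPUT = [-10, 20, 20]  # approximately a1 OR a2

def xor_net(x1, x2):
    """Return (hidden activations, output activation) for binary inputs."""
    hidden = [unit(w, [x1, x2]) for w in HIDDEN]
    return hidden, unit(OUTPUT, hidden)

for x1 in (0, 1):
    for x2 in (0, 1):
        hidden, out = xor_net(x1, x2)
        # Hidden activations stay strictly between 0 and 1.
        print(x1, x2, [round(h, 4) for h in hidden], round(out))

# Three independent sigmoid outputs, each with pre-activation 0:
outputs = [sigmoid(0.0) for _ in range(3)]
print(sum(outputs))  # 1.5, so the outputs do not have to sum to 1
```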

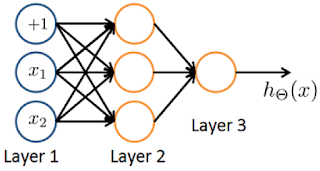

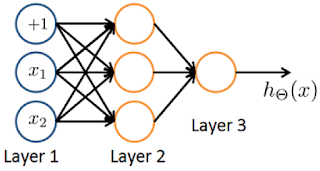

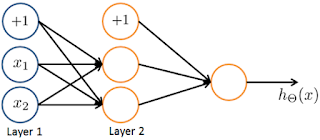

2. Consider the following neural network which takes two binary-valued inputs $x_1, x_2 \in \{0, 1\}$ and outputs $h_\Theta(x)$. Which of the following logical functions does it (approximately) compute?

ANSWER:- 1
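A question like this can be worked out by evaluating the network on all four input combinations and reading off the truth table. Here is a small Python sketch for a single sigmoid unit. The weights used are assumptions for illustration only, since the weights from the quiz figure do not appear in the text; `truth_table` is a hypothetical helper name.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def truth_table(bias, w1, w2):
    """Evaluate h(x) = sigmoid(bias + w1*x1 + w2*x2) on all binary inputs."""
    table = {}
    for x1 in (0, 1):
        for x2 in (0, 1):
            # Large-magnitude weights push the output close to 0 or 1.
            table[(x1, x2)] = round(sigmoid(bias + w1 * x1 + w2 * x2))
    return table

# Hypothetical weights; substitute the ones shown in the quiz figure.
print(truth_table(-30, 20, 20))  # behaves like AND: only (1, 1) maps to 1
print(truth_table(-10, 20, 20))  # behaves like OR: only (0, 0) maps to 0
```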

3. Consider the neural network given below. Which of the following equations correctly computes the activation ? Note: $g(z)$ is the sigmoid activation function.
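For reference, the general forward-propagation rule in the course's notation can be written as below. This is only the general form, assuming $\Theta^{(l)}$ maps layer $l$ to layer $l+1$, $s_l$ is the number of non-bias units in layer $l$, and $a_0^{(l)} = 1$ is the bias unit. The specific indices for this question depend on the figure.

```latex
a_i^{(l+1)} = g\!\left( \sum_{j=0}^{s_l} \Theta_{ij}^{(l)} \, a_j^{(l)} \right),
\qquad g(z) = \frac{1}{1 + e^{-z}}
```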

4. You have the following neural network:

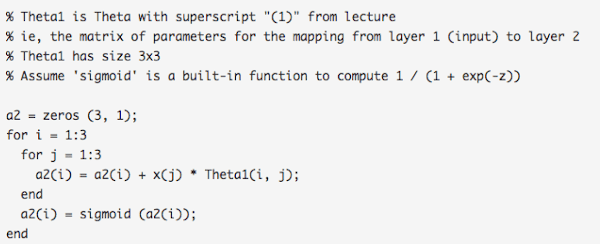

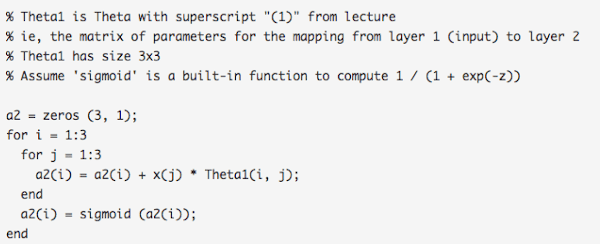

You’d like to compute the activations of the hidden layer $a^{(2)} \in \mathbb{R}^3$. One way to do so is the following Octave code:

You want to have a vectorized implementation of this (i.e., one that does not use for loops). Which of the following implementations correctly compute $a^{(2)}$? Check all that apply.

- z = Theta1 * x; a2 = sigmoid (z);

- a2 = sigmoid (x * Theta1);

- a2 = sigmoid (Theta2 * x);

- z = sigmoid(x); a2 = sigmoid (Theta1 * z);
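To see why a matrix-vector product followed by an element-wise sigmoid matches the loop version, here is a minimal sketch in plain Python (standing in for Octave). The `matvec` helper plays the role of Octave's `Theta1 * x`; the helper names and the numeric values of `theta1` and `x` are assumptions for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def hidden_loop(theta1, x):
    """Element-by-element version: accumulate each weighted sum, then squash."""
    a2 = [0.0] * len(theta1)
    for i in range(len(theta1)):
        for j in range(len(x)):
            a2[i] += theta1[i][j] * x[j]
        a2[i] = sigmoid(a2[i])
    return a2

def matvec(matrix, vector):
    """Matrix-vector product; plays the role of Octave's `Theta1 * x`."""
    if any(len(row) != len(vector) for row in matrix):
        raise ValueError("inner dimensions do not match")
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

def hidden_vectorized(theta1, x):
    """z = Theta1 * x; a2 = sigmoid(z), with the sigmoid applied element-wise."""
    return [sigmoid(z) for z in matvec(theta1, x)]

# Assumed example values: a 3x3 Theta1 and x = [1 (bias), x1, x2].
theta1 = [[0.1, -0.2, 0.3],
          [0.5, 0.4, -0.6],
          [-0.7, 0.8, 0.9]]
x = [1.0, 2.0, -1.0]

loop_result = hidden_loop(theta1, x)
vec_result = hidden_vectorized(theta1, x)
print(all(math.isclose(a, b) for a, b in zip(loop_result, vec_result)))
```

Assuming x is a 3x1 column vector, `x * Theta1` fails in Octave because the inner dimensions (1 and 3) do not match. `Theta2` holds the weights from the hidden layer to the output, so it is the wrong matrix for computing the hidden activations.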

5. You are using the neural network pictured below and have learned the parameters $\Theta^{(1)}$ (used to compute $a^{(2)}$) and $\Theta^{(2)}$ (used to compute $a^{(3)}$ as a function of $a^{(2)}$). Suppose you swap the parameters for the first hidden layer between its two units and also swap the output layer accordingly. How will this change the value of the output $h_\Theta(x)$?

- It will stay the same.

- It will increase.

- It will decrease.

- Insufficient information to tell: it may increase or decrease.
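The effect of such a swap can be checked with a small forward pass. The sketch below is in Python and uses hypothetical parameter values (not the quiz's) for a network with 2 inputs, 2 hidden units, and 1 output. It swaps the two rows of `theta1` together with the matching output weights in `theta2` and compares the two outputs.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(theta1, theta2, x):
    """Forward pass: 2 inputs -> 2 hidden units -> 1 output, with bias units."""
    a1 = [1.0] + list(x)  # prepend the bias unit
    a2 = [1.0] + [sigmoid(sum(w * a for w, a in zip(row, a1))) for row in theta1]
    return sigmoid(sum(w * a for w, a in zip(theta2, a2)))

# Hypothetical parameters (not the quiz's values).
theta1 = [[1.0, 0.5, -1.5],
          [-0.3, 2.0, 0.7]]
theta2 = [0.2, -1.1, 0.9]  # [bias, weight for hidden unit 1, weight for hidden unit 2]

# Swap the two hidden units (rows of theta1) and their output weights.
theta1_swapped = [theta1[1], theta1[0]]
theta2_swapped = [theta2[0], theta2[2], theta2[1]]

x = (0.4, -0.8)
original = forward(theta1, theta2, x)
swapped = forward(theta1_swapped, theta2_swapped, x)
print(math.isclose(original, swapped))
```

The hidden units are only relabeled: each activation is still multiplied by the same output weight as before, so the weighted sum at the output unit is unchanged up to floating-point rounding.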

Feel free to ask your doubts in the comment section. I will do my best to answer them. If you find this helpful, kindly comment and share the post. This is the simplest way to encourage me to keep doing such work. Thanks & Regards, - Wolf
